Gesture Selection for Human-Robot Interaction

While automation has provided many benefits in manufacturing, the need for human mechanics has persisted. In limited-access areas, such as the inside of an airplane wing, mechanics have to reach into the working space with a single hand and cannot see within the space. This forces them to work by touch and experience, which makes the work slow and inefficient. A camera placed within the enclosed area can provide significant benefit over baseline methods, such as working by touch alone or using mirrors as a vision aid. Using hand tracking, a robotic assistant can follow the hand and display the work area around it to the mechanic. However, the mechanic also needs a way to communicate desired view changes to the robot assistant. Further, this method should require no physical contact between the mechanic and the robot, so that the mechanic can control the camera position without setting down tools or leaving the space while completing the work.

Because the only input to the robotic gesture recognition system is a continuously tracked hand, the motions the mechanic makes while working can be confused with motions intended as control commands. It is possible to develop a metric that distinguishes a gesture made to control the camera from any other hand movement made by the mechanic. Choosing control gestures that maximize this metric improves the robot's recognition of them.
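To make the idea of such a metric concrete, the following is a minimal sketch only, not the metric from the related publication. It assumes each motion has already been reduced to a short feature vector (the two features here, speed and wrist rotation, are hypothetical), and scores a candidate gesture by its distance from the average work motion, measured in per-feature standard deviations. The function names and sample values are illustrative assumptions.

```python
import math
from statistics import mean, pstdev


def separability(candidate, background):
    """Distance from a candidate gesture to the mean background motion,
    measured in per-feature standard deviations (an assumed, simple metric)."""
    total = 0.0
    for d in range(len(candidate)):
        column = [sample[d] for sample in background]
        mu = mean(column)
        sigma = pstdev(column) or 1e-9  # guard against zero spread
        total += ((candidate[d] - mu) / sigma) ** 2
    return math.sqrt(total)


def select_gesture(candidates, background):
    """Return the name of the candidate with the highest separability score."""
    return max(candidates, key=lambda name: separability(candidates[name], background))


# Hypothetical (speed, wrist rotation) features recorded during ordinary work.
background = [(0.10, 0.00), (0.20, 0.10), (0.15, -0.10), (0.05, 0.05)]

# Hypothetical candidate control gestures in the same feature space.
candidates = {
    "slow_wave": (0.20, 0.10),   # resembles ordinary work motion
    "fist_twist": (0.10, 2.00),  # large rotation rarely seen during work
}

best = select_gesture(candidates, background)
print(best)
```

In this toy data the candidate with the unusual wrist rotation scores far higher than the one that resembles ordinary work motion, which is the behavior a gesture-selection metric of this kind is meant to reward.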

Method

A LEAP Motion hand tracking device tracks the position of the mechanic's hand and fingers. To support effective gesture-based communication between the robot assistant and the mechanic, the robot must be able to tell a gesture meant to control its pose or view apart from any other gesture made by the mechanic. To this end, “start” and “stop” gestures are designated: when the robot observes them, it knows to watch for the more complex gestures that signal that the mechanic wants a new view angle.
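One way to picture the role of the “start” and “stop” gestures is as a gate in front of the command recognizer. The sketch below is an illustration under assumptions, not the project's implementation: it assumes an upstream classifier has already turned tracked hand frames into string labels, and it interprets “start” as opening a listening window and “stop” as closing it. The class name, label names, and this window interpretation are all hypothetical.

```python
from enum import Enum


class Mode(Enum):
    IDLE = "idle"            # ordinary work motion is ignored
    LISTENING = "listening"  # labels are treated as view commands


class GatedRecognizer:
    """Accepts view commands only between a start and a stop gesture."""

    def __init__(self, start="start", stop="stop"):
        self.start = start
        self.stop = stop
        self.mode = Mode.IDLE
        self.commands = []

    def observe(self, label):
        """Feed one classified gesture label; return it if accepted as a command."""
        if self.mode is Mode.IDLE:
            if label == self.start:
                self.mode = Mode.LISTENING
            return None  # everything else while idle is treated as work motion
        if label == self.stop:
            self.mode = Mode.IDLE
            return None
        if label == self.start:
            return None  # repeated start while already listening is ignored
        self.commands.append(label)
        return label


# Hypothetical label stream: "pan_left" appears three times but only the
# occurrence between "start" and "stop" is accepted as a command.
recognizer = GatedRecognizer()
for label in ["reach", "pan_left", "start", "pan_left", "stop", "pan_left"]:
    recognizer.observe(label)

print(recognizer.commands)
```

Gating in this way means a motion that happens to resemble a command during ordinary work is discarded unless the mechanic has deliberately opened the window first.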

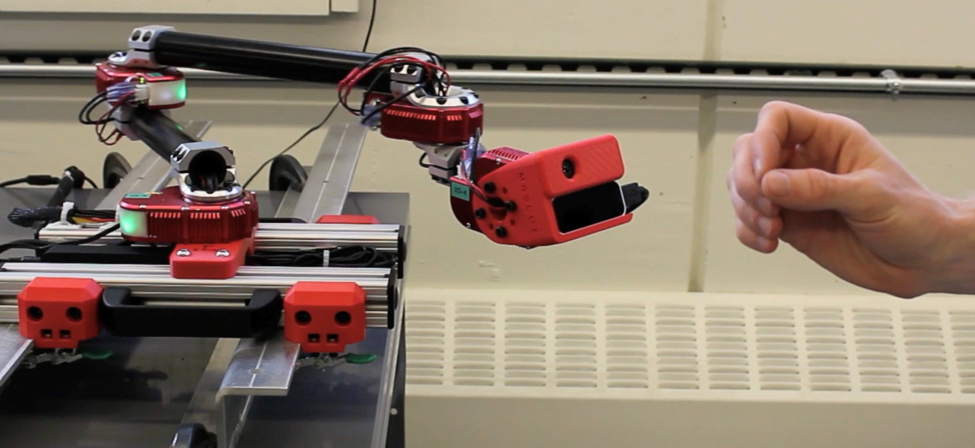

Robotic visual assistance platform with LEAP Motion hand tracking device (at center).

Experimentation

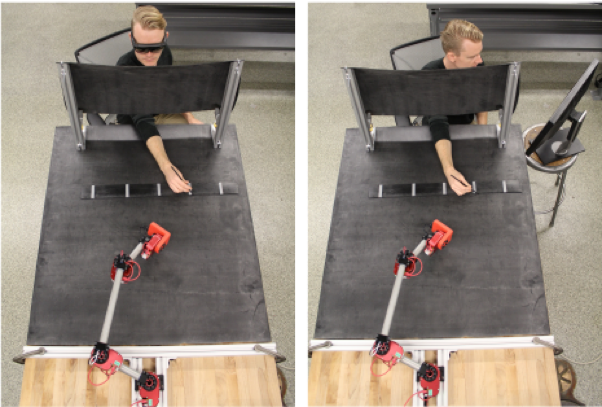

To test the tracking and gesture control, various work tasks are simulated in an environment where the test subject cannot see directly into the work space. Instead, the subject views the work space either on a monitor showing the camera feed from the robotic assistant or through augmented reality glasses. In the test shown in the figure, the subject performed a mock task and paused at intervals to perform a gesture that the robot is expected to recognize.

Experiment done using augmented reality glasses (left) and a display monitor (right) to view the camera feed.

Rather than executing a control action, the robot records how confident it would be in performing the action based on the hand gesture made. These confidence values are then evaluated post-experiment by our engineers.
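The record-instead-of-act step can be sketched as a small log that stores one confidence value per observed gesture and exports it for later review. This is a hedged illustration: the field names, the CSV format, and the assumption that confidence is a value between 0 and 1 are not taken from the experiment itself.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class ConfidenceLog:
    """Stores recognition confidences for review instead of moving the robot."""

    rows: list = field(default_factory=list)

    def record(self, trial, gesture, confidence):
        # Assumed convention: confidence is a probability-like score in [0, 1].
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        self.rows.append((trial, gesture, confidence))

    def to_csv(self):
        """Serialize the log as CSV text for post-experiment evaluation."""
        buffer = io.StringIO()
        writer = csv.writer(buffer, lineterminator="\n")
        writer.writerow(["trial", "gesture", "confidence"])
        writer.writerows(self.rows)
        return buffer.getvalue()


# Hypothetical trial data.
log = ConfidenceLog()
log.record(1, "pan_left", 0.82)
log.record(2, "tilt_up", 0.41)
print(log.to_csv())
```

Keeping the robot passive during the trial separates recognition quality from control behavior, so a low-confidence recognition cannot disturb the camera view while the subject is working.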

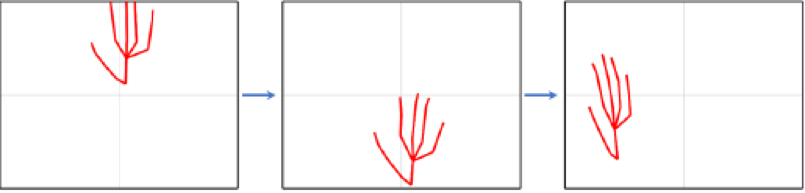

What the motion sensor sees as a mechanic moves their hand.

Related Publication

R. Hendrix, P. Owan, J. Garbini and S. Devasia. “Context-Specific Separable Gesture Selection for Control of a Robotic Manufacturing Assistant,” 2nd IFAC Conference on Cyber-Physical & Human-Systems (CPHS’18), Dec. 14-15, Miami, FL, USA.

Researchers Involved

Rose Hendrix, Parker Owan, Joe Garbini and Santosh Devasia